![RBI Report](/webp-images/home/Hero/RBI Report New3.webp)

![RBI Report Made Easy](/webp-images/home/Hero/Homepage Hero Mobile Banners/RBI Report Made Easy New3 Mobile.webp)

We innovate to simplify complexity and unlock new possibilities. Through modern data platforms, cloud-native architectures, and a culture of continuous experimentation, we build solutions that don’t just keep up with change — they lead it. When innovation is purposeful, real impact follows.

Cloud and Infrastructure:

Optimize. Accelerate. Uptime.

At Ahana, we deliver tailored IT solutions to help businesses build robust infrastructures and achieve seamless cloud integration. With experience across diverse industries, including BFSI, logistics, manufacturing, and more, our personalized approach addresses each client’s unique needs. We provide scalable and secure solutions designed to drive efficiency and growth. Our skilled team offers top-tier services in infrastructure and cloud consulting, design, and deployment, as well as cloud migration and optimisation.

Data At The Core

Raw data to real impact

Data & Analytics Advisory

Data Modernization

Data Management

Data Governance

Democratizing Technologies across Industries

Modern, scalable, and high-performing solutions. Whether it's cloud-native architectures, real-time analytics, or seamless integrations, we create the foundation for resilient and future-proof digital ecosystems.

Fifteen years ago, Ahana began with a simple belief: technology should enable growth, not create complexity. What started as a focused IT services firm has evolved into a trusted digital transformation partner across industries and geographies.

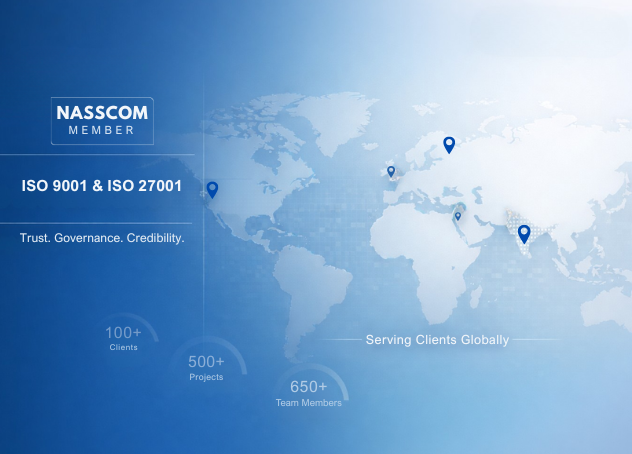

Growing alongside our clients, we adapted to new technologies, expanded capabilities, and raised delivery standards. Today, Ahana works with 100+ clients, delivers across 20+ technologies, and has completed 500+ projects, powered by a team of 650+ professionals across data, cloud, automation, and application services. From modernizing data infrastructure to automating workflows and simplifying analytics, we turn technology into a competitive advantage.

Our journey is marked by key milestones—becoming a NASSCOM member in 2016 and joining the NASSCOM Karnataka Regional Council in 2025. Our strong focus on quality, security, and governance is reinforced through ISO 9001 and ISO 27001 certifications. What remains constant is our mindset—to build secure, scalable, future-ready technology that works as hard as our clients do, without the complexity.

Because evolution isn’t just about how far we’ve come—it’s about being ready for what’s next.

Our Clients

We thrive by putting our clients and their success at the heart of everything we do.

Client Testimonial

Ahana has been a huge help with their Managed Services for our MSSQL, Sybase ASE, Sybase Replication, and Document DB environments. Chethan and his team have been fantastic; they keep everything running smoothly and quickly fix any issues. Their approach to updates and deployments is excellent, making sure our systems work perfectly. We really appreciate their hard work and expertise; it's made a big difference for us. Ahana has played an important role in optimizing our database infrastructure. Ahana has become a trusted partner for our team, and we look forward to continuing our successful partnership with them.

Vinod Kochu

VP - Kotak Securities

JK Tyre is a consumer-facing company and we put great emphasis on customer satisfaction. Working with a partner like Ahana that has proven capabilities in AI and ML, we have managed to completely digitise our warranty claims processing system. This has resulted in reduced TAT for our customers and greater customer satisfaction.

Sharad Agarwal

CDIO, JK Tyre

Ahana’s MSSQL Managed Support has been a game-changer for our database operations. Their team is highly knowledgeable, proactive, and quick to respond. From performance tuning to routine maintenance, Ahana consistently ensures our databases run smoothly and securely. We now enjoy improved uptime, faster query performance, and complete peace of mind. Our internal team can now focus on strategic initiatives instead of firefighting. Their proactive optimization, timely upgrades, and clear communication have improved performance and reduced operational costs. Ahana truly understands what enterprise-grade support means.

Jegatheeswaran Palsamy

Head of Information Technology - Orient Bell

At Karnataka Bank, we faced challenges that required a partner who could take full ownership of our managed services, especially in areas like patch management and system upgrades. Their quick learning curve and empathetic approach have helped us tackle longstanding challenges related to end-of-life systems and patch management. Ahana's responsiveness and understanding have not only reduced our downtime but also contributed to smoother banking operations and better customer experiences.

Venkat Krishnan

Chief Information Officer - Karnataka Bank

ICICI Prudential has partnered with Ahana for more than four years and they are playing a vital role in our digital transformation journey. They have brought their expertise in Database Services, Infrastructure Management, Business Analytics, and Data Engineering to help us to envision, develop, and enhance our Enterprise Data Warehouse platform. What sets Team Ahana apart from others is their team of experienced professionals who approach challenges with a positive and proactive attitude.

Ganessan Soundiram

Chief Technology Officer - ICICI Prudential Life Insurance

Partnering with Ahana has transformed our IT operations. In a fast-paced fintech environment, reliability, security, and speed are critical — and Ahana consistently delivers. Their on-site engineer has become a key part of our workflow, managing laptops, networks, onboarding, and endpoint security with ease. Their mix of strong technical expertise and exceptional service stands out. Issues are resolved quickly, systems run smoothly, and their support is always professional. Ahana has built a secure, efficient IT backbone for us, and we highly recommend them as a trusted technology partner.

Bipin Toro

VP Engg - CheQ

Technology never stands still, and neither do we. By leveraging AI-driven automation, predictive analytics, and intelligent data processing, we help businesses stay ahead with agility, efficiency, and innovation at every stage.

Our Resources

Case Studies

Real problems. Real solutions. Real impact.

See how we help businesses embrace AI, automate smarter, and modernize for the future. Our success stories showcase faster workflows, smarter decisions, and real business growth.

View More

Blogs

Expert insights, minus the jargon.

From AI breakthroughs to automation best practices, our thought leaders share what matters. Whether it's intelligent automation or enterprise transformation, we break it down so you can stay ahead.

View More

News & Events

Where innovation meets action.

Stay updated on Ahana's latest innovations, events, and expert insights. From keynotes to product updates, see how we're shaping the future of intelligent automation and enterprise tech.

View More

Technology Stack

Global partnerships that support the co-creation and co-development of solutions and enable us to enhance our service offerings.

From scattered data to strategic intelligence, we turn information into a powerful asset. By unlocking actionable insights, we empower businesses to make faster, smarter decisions that fuel growth and competitive advantage.

Why Ahana?

15+

Years

100+

Clients

20+

Technologies

0+

Services